The Six Best ETL Tools in 2025

Modern data teams juggle dozens of sources, from SaaS applications and log streams to on-premise databases, yet executives still expect near-real-time insight. That’s where a solid ETL platform earns its keep: it automates the heavy lifting of extracting disparate data, transforming it into a clean, analytics-ready shape and loading it wherever your analysts live.

We evaluated today’s leading solutions on versatility, performance, pricing transparency, and ease of use, then narrowed the field to six standouts that cover every scenario, from enterprise-grade workloads to nimble, low-code deployments.

Altova MapForce 2025

Best all-around ETL solution

Altova MapForce is an any-to-any ETL tool that supports the data formats prevalent in 2025 through an easy-to-use, low-code visual interface for defining data integration and ETL projects. A single version of MapForce covers JSON, XML, PDF, CSV, EDI, relational databases, NoSQL databases, Excel, XBRL, Shopify/GraphQL, and more, with no additional connectors to purchase.

Altova MapForce offers scalable automation options and is the most affordable ETL solution available today.

Features:

- AI-ready ETL tool

- Graphical, low-code ETL definition

- Pre-built connectors to databases, files, APIs, and other data sources

- Drag-and-drop data integration

- Supports all SQL and NoSQL databases

- Extensive EDI support

- MapForce PDF Extractor

- Visual function builder

- Built-in data transformation debugger

- Instant data conversion

- High performance automation

Pros:

- All data formats supported in one version: no additional connectors required

- Low-code and highly customizable

- Effective for enterprises as well as smaller organizations

- Highly affordable

Cons:

- Desktop tool is Windows only

AWS Glue

Best ETL as a service

AWS Glue is a serverless ETL service that can be used for analytics, machine learning, and application development. It works alongside other AWS services such as Amazon Athena, Amazon EMR, and Amazon Redshift Spectrum, which can query data cataloged in the AWS Glue Data Catalog.

Features:

- Visual drag-and-drop interface

- Automatic code generation

- ETL job scheduling

- Tools for building and monitoring ETL pipelines

- Automatic data and schema discovery

- Scales automatically

Pros:

- Scales easily

- Serverless

- Automated data schema recognition

Cons:

- Steep learning curve

- Extra-cost connectors for additional databases

- Limited integration outside the AWS environment

IBM DataStage

Best in the IBM ecosystem

IBM DataStage ETL software is designed for high-volume data integration supported by load balancing and parallelization. Connectors include Sybase, Hive, JSON, Oracle, AWS, Teradata, and others.

DataStage also integrates with other components in the IBM InfoSphere ecosystem, allowing users to develop, test, deploy, and monitor ETL jobs.

Features:

- SaaS

- Visual interface

- Metadata exchange using IBM Watson Knowledge Catalog

- Pipeline automation

- Pre-built connectors

- Automated failure detection

- Distributed data processing

Pros:

- Handles large volumes of data

- Extensive tech support

Cons:

- Requires SQL and BASIC expertise

- Expensive

Informatica

Best for very large enterprises

Informatica is an ETL tool with no-code and low-code functionality, designed for very large enterprises and organizations. It offers a wide range of connectors for cloud platforms, data warehouses, and data lakes, including AWS, Azure, Google Cloud, and Salesforce.

Informatica is primarily used to retrieve and analyze data from various sources to build enterprise data warehouse applications. It supports ETL, data masking, data replication, data quality checking, and data virtualization.

Features:

- Cloud-based ETL

- Data and application integration

- Data warehouse builder

- Mapplets for code re-use

- Centralized error logging

- Metadata repository

- High performance for big data

Pros:

- Handles large volumes of data

- Connectivity to most database systems

- Graphical workflow definition

Cons:

- Expensive: overall cost + extra fees for connectors

- Complex and somewhat outdated UI and deployment process

- Lack of job scheduling options

Oracle Data Integrator

Best for the Oracle ecosystem

Oracle Data Integrator supports ETL of structured and unstructured data and is designed for large organizations that run other Oracle applications. It provides a graphical environment to build, manage, and maintain data integration processes in business intelligence systems.

Features:

- Pre-built connectors

- Big data integration

- Supports Oracle databases, Hadoop, eCommerce systems, flat files, XML, JSON, LDAP, JDBC, ODBC

- Integration with other Oracle enterprise tools

Pros:

- User-friendly interface

- Parallel execution increases performance

- Handles large volumes of data

- Well integrated in Oracle ecosystem

Cons:

- Expensive

- Requires extensive Java expertise

- Lacks real-time integration options

Talend Open Studio

Best for basic ETL tasks

Talend Open Studio is open-source ETL software with a drag-and-drop UI for defining data pipelines, from which it generates Java and Perl code.

Talend Open Studio can integrate with other Talend extensions for data visualization, application and API integration, and other functionality. ETL jobs can be run within the Talend environment or executed as standalone scripts.

Features:

- Graphical interface

- Data profiling and cleansing

- Integrates with third-party software

- Automates data integration with wizards and graphical elements

Pros:

- Easy-to-understand UI

- Comprehensive connection options

- Community and company support

Cons:

- Changes to a job require code changes

- Does not handle large amounts of data

- Debugging is difficult

What is an ETL Tool?

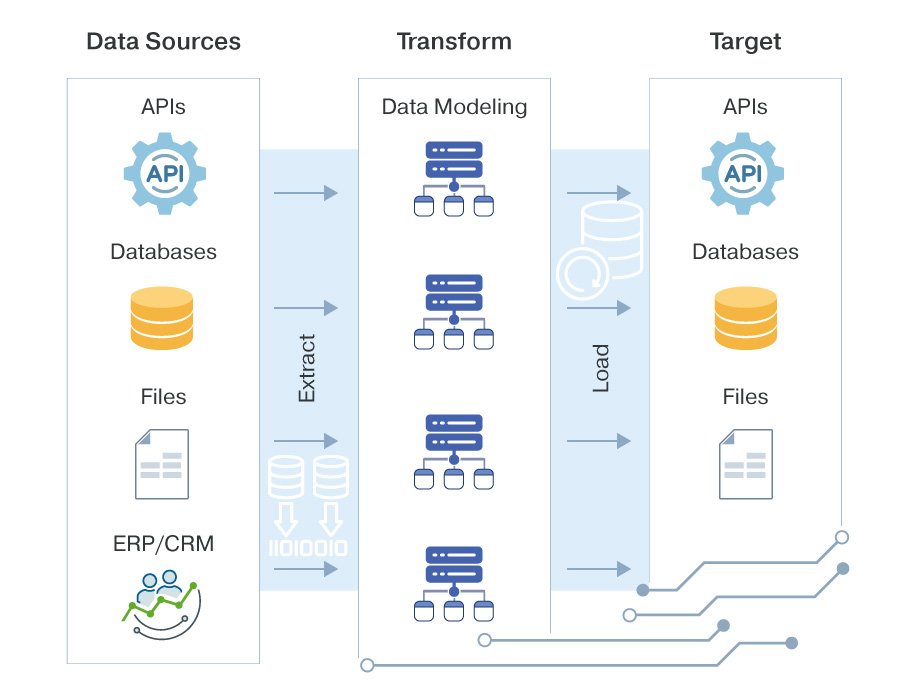

ETL (Extract, Transform, Load) refers to a data integration process in which data is extracted from a source, transformed to a particular format, and loaded into a target database.

Businesses today process an overwhelming amount of data from various silos, making it increasingly difficult to extract meaningful insights from that data. ETL plays a crucial role in collecting, normalizing, and organizing incoming data so that it can be easily analyzed and used for business intelligence, reporting, decision-making, and other data-driven activities.

Defining ETL processes manually is time-consuming and error-prone, requiring extensive coding. ETL tools are software designed to automate the extract-transform-load process, consolidating data from disparate sources and transforming it for storage in a target system. The best ETL tools abstract away the complexities of data integration by providing a user-friendly interface to design, manage, and execute ETL workflows. They help businesses ensure data accuracy and improve efficiency along the way.

In many business systems, new information intended for import often arrives in a data format incompatible with the existing repository. ETL tools perform the following steps to ready the data for storage and further processing:

- Extract: In this step, data is extracted from one or more source systems, which can include SQL or NoSQL databases, e-commerce systems, Excel spreadsheets, APIs, and more. These systems often export data in formats such as XML, JSON, PDF, CSV, EDI, and others, all of which will be transformed to a uniform format in the next step.

- Transform: To make sense of data once it is extracted, it often needs to be transformed to a normalized format. This can involve cleaning the data (enforcing business logic, correcting errors, handling missing values), aggregating data, enriching it with additional information, and converting the data to a standardized format. Transformation is a critical step to ensure data quality and consistency.

- Load: After the data has been extracted and transformed, it is loaded into a repository such as a database or data warehouse, where businesses can use it further. Alternatively, it may be loaded directly into files used for analysis (e.g., Excel workbooks) or delivered to other systems via APIs.
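To make the three steps concrete, here is a minimal sketch in Python using only the standard library. It assumes a hypothetical CSV export of orders (`RAW_CSV`) as the source and an in-memory SQLite database as the target; the `extract`, `transform`, and `load` functions are illustrative names, not part of any ETL product.

```python
import csv
import io
import sqlite3

# Hypothetical source data: a CSV export with stray whitespace,
# a missing value, and inconsistent currency codes.
RAW_CSV = """order_id,customer,amount,currency
1, Alice ,19.99,usd
2,Bob,,usd
3,Carol,5.00,USD
"""


def extract(text: str) -> list[dict]:
    """Extract: parse rows from the CSV source into dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: list[dict]) -> list[tuple]:
    """Transform: clean values and convert them to typed, uniform records."""
    records = []
    for row in rows:
        amount = row["amount"].strip()
        records.append((
            int(row["order_id"]),
            row["customer"].strip(),            # remove stray whitespace
            float(amount) if amount else None,  # keep a missing amount as NULL
            row["currency"].strip().upper(),    # normalize currency codes
        ))
    return records


def load(conn: sqlite3.Connection, records: list[tuple]) -> None:
    """Load: write the cleaned records into a target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL, currency TEXT)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?, ?)", records)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(conn, transform(extract(RAW_CSV)))
print(conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
# [(1, 'Alice', 19.99, 'USD'), (2, 'Bob', None, 'USD'), (3, 'Carol', 5.0, 'USD')]
```

Keeping each step in its own function mirrors how ETL tools separate connectors, transformation logic, and targets, so any one step can be swapped without touching the others.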

Why Are ETL Tools Needed?

Without ETL tools, knowledge workers may spend more time collecting, combining, and converting data from various sources than on actual data analysis. Because they speed up and simplify the process, ETL tools are critical in today’s data-centric business landscape.

ETL tools can be used for several overlapping purposes:

- Data integration: Many businesses deal with data spread across multiple sources, such as EDI messaging systems, marketing platforms, sales databases, and more. ETL helps integrate data from these varied sources into a unified and organized format.

- Data warehousing: ETL is a foundational step for building data warehouses. Data warehouses store historical and current data from various sources, allowing for complex queries and reporting.

- Business intelligence and reporting: ETL processes consolidate and prepare data for business intelligence and reporting tools and remove the need for time-consuming manual data transformation.

- Decision-making: Timely access to reliable data is crucial for making strategic decisions. Through efficient automation, ETL tools ensure that relevant and up-to-date data is available for analysis and decision-making.

- Regulatory compliance: In industries with strict regulatory requirements, ETL workflows can be defined such that data is processed, handled, and stored in compliance with regulations.

- Operational efficiency: By automating the process of data extraction, transformation, and loading, ETL tools save time and reduce manual data entry errors.

- Scalability: As businesses grow and accumulate more data, ETL processes can be scaled to handle larger volumes of data while maintaining performance.

By driving efficiencies, ETL tools help businesses harness the power of their data and gain a deeper understanding of their operations and customers.

How Do ETL Tools Work?

ETL tools are commonly used in data integration, data warehousing, and business intelligence scenarios. Here's a closer look at how ETL tools work:

Extract:

- Connectivity: ETL tools provide connectivity in different ways. Some offer connectors and adaptors that are sold individually while others provide connectivity to all prevalent data formats without extra fees. Regardless of the approach, ETL tools provide mechanisms for connecting to data sources used by today’s business systems, such as relational databases, Excel spreadsheets, APIs, flat files, and so on.

- Data retrieval: The tool retrieves data from the source systems based on defined extraction criteria. This might involve specifying tables, views, or queries to pull the required data.
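As a rough illustration of extraction criteria, the Python sketch below pulls selected columns and rows from a source database with a parameterized query. The `orders` table, its sample rows, and the `extract` helper are hypothetical, and SQLite stands in for whatever source system a real tool would connect to.

```python
import sqlite3

# Hypothetical source system: an in-memory SQLite database standing in for a production DB.
source = sqlite3.connect(":memory:")
source.executescript("""
    CREATE TABLE orders (order_id INTEGER, region TEXT, amount REAL, created TEXT);
    INSERT INTO orders VALUES (1, 'EU', 10.0, '2025-01-05');
    INSERT INTO orders VALUES (2, 'US', 25.0, '2025-02-10');
    INSERT INTO orders VALUES (3, 'EU', 7.5, '2025-03-01');
""")

# Columns the extraction job may request (identifiers cannot be bound as query parameters).
ALLOWED_COLUMNS = {"order_id", "region", "amount", "created"}


def extract(conn: sqlite3.Connection, columns: list[str], region: str, since: str) -> list[tuple]:
    """Retrieve only the required columns and rows, based on the extraction criteria."""
    unknown = set(columns) - ALLOWED_COLUMNS
    if unknown:
        raise ValueError(f"unknown columns: {sorted(unknown)}")
    sql = (
        f"SELECT {', '.join(columns)} FROM orders "
        "WHERE region = ? AND created >= ? ORDER BY order_id"
    )
    # Filter values are bound as parameters; ISO dates compare correctly as text.
    return conn.execute(sql, (region, since)).fetchall()


rows = extract(source, ["order_id", "amount"], region="EU", since="2025-02-01")
print(rows)  # [(3, 7.5)]
```

Because column names cannot be passed as query parameters, the sketch checks them against an allow-list before building the SQL string, while the filter values themselves are bound safely.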

Transform:

- Data mapping: During data transformation, fields from the source are mapped to corresponding fields in the target, ensuring data consistency.

- Data conversion: Data might need to be converted into a standardized format or unit of measurement.

- Data combining: ETL software allows organizations to combine data from multiple diverse sources and transform it to a uniform target data structure.

- Data cleaning: ETL tools can clean and validate data by removing duplicates, correcting errors, and handling missing or inconsistent values.

- Data enrichment: Additional data from external sources, such as AI systems, can be added to enhance the existing data.

- Data aggregation: ETL tools can perform calculations and aggregations on the data, such as summing, averaging, or counting records, as well as more complex calculations.
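The following sketch walks a few records through several of these transformations: mapping differently named fields from two hypothetical sources (a CRM and a web shop) onto one schema, converting cents to whole currency units, cleaning and de-duplicating, and aggregating revenue per country. The field names, the single shared currency, the choice to treat missing revenue as 0.0, and the rule that the first record for a customer wins are all assumptions made for illustration.

```python
from collections import defaultdict

# Hypothetical records from two sources with different field names and units
# (both assumed to use the same currency).
crm_rows = [
    {"CustID": "c1", "Country": "de", "Revenue_cents": 1250},
    {"CustID": "c2", "Country": "US", "Revenue_cents": None},
]
shop_rows = [
    {"customer_id": "c1", "country": "DE", "revenue": 12.50},  # same customer as in the CRM
    {"customer_id": "c3", "country": "us", "revenue": 40.00},
]


def map_crm(row: dict) -> dict:
    # Data mapping + conversion: rename fields and convert cents to whole units.
    cents = row["Revenue_cents"]
    return {
        "customer_id": row["CustID"],
        "country": row["Country"],
        "revenue": cents / 100 if cents is not None else None,
    }


def map_shop(row: dict) -> dict:
    # Data mapping: this source already uses the target field names.
    return {"customer_id": row["customer_id"], "country": row["country"], "revenue": row["revenue"]}


def clean(records: list[dict]) -> list[dict]:
    # Data cleaning: normalize country codes, fill missing values, drop duplicates.
    seen, out = set(), []
    for r in records:
        revenue = r["revenue"] if r["revenue"] is not None else 0.0
        r = {**r, "country": r["country"].upper(), "revenue": revenue}
        if r["customer_id"] in seen:
            continue  # keep the first record seen for each customer
        seen.add(r["customer_id"])
        out.append(r)
    return out


def aggregate(records: list[dict]) -> dict:
    # Data aggregation: total revenue per country.
    totals = defaultdict(float)
    for r in records:
        totals[r["country"]] += r["revenue"]
    return dict(totals)


# Data combining: bring both sources into one uniform structure.
combined = [map_crm(r) for r in crm_rows] + [map_shop(r) for r in shop_rows]
cleaned = clean(combined)
print(aggregate(cleaned))  # {'DE': 12.5, 'US': 40.0}
```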

Load:

- Target repository: ETL tools can load data into a variety of target data structures, including files, APIs, databases, and data warehouses where the data can be further used for analysis, querying, reporting, or other applications.

- Loading strategies: ETL tools offer different loading strategies. Full load replaces all existing data in the target with new data. Incremental load only adds new or modified data since the last load. Delta load handles changes that occurred in a specific time frame. The best ETL tools let users choose the strategy that fits each job.

- Error handling: ETL tools can handle errors during loading, such as data type mismatches or constraint violations. They might log errors and allow users to address them.
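Below is a minimal sketch, assuming a SQLite target with a hypothetical `customers` table, of a full load, an incremental load implemented as an upsert (SQLite's `INSERT ... ON CONFLICT DO UPDATE`, available since SQLite 3.24), and row-level error handling that logs constraint violations instead of failing the whole job. The `full_load` and `incremental_load` helpers are illustrative names.

```python
import logging
import sqlite3

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("etl.load")

# Hypothetical target table with constraints that bad rows can violate.
target = sqlite3.connect(":memory:")
target.execute(
    "CREATE TABLE customers ("
    " id INTEGER PRIMARY KEY,"
    " name TEXT NOT NULL,"
    " balance REAL CHECK (balance >= 0))"
)


def full_load(conn: sqlite3.Connection, rows: list[tuple]) -> None:
    """Full load: replace everything in the target with the new data set."""
    with conn:  # one transaction: commits on success, rolls back on error
        conn.execute("DELETE FROM customers")
        conn.executemany("INSERT INTO customers VALUES (?, ?, ?)", rows)


def incremental_load(conn: sqlite3.Connection, rows: list[tuple]) -> list[tuple]:
    """Incremental load: insert new rows and update changed ones (upsert).
    Rows that violate constraints are logged and returned instead of aborting the job."""
    rejected = []
    for row in rows:
        try:
            with conn:
                conn.execute(
                    "INSERT INTO customers VALUES (?, ?, ?) "
                    "ON CONFLICT(id) DO UPDATE SET name = excluded.name, balance = excluded.balance",
                    row,
                )
        except sqlite3.IntegrityError as exc:
            log.warning("rejected row %r: %s", row, exc)
            rejected.append(row)
    return rejected


full_load(target, [(1, "Alice", 10.0), (2, "Bob", 5.0)])
bad = incremental_load(target, [(2, "Bob", 7.5), (3, "Carol", 1.0), (4, None, 3.0), (5, "Dan", -1.0)])
print(target.execute("SELECT * FROM customers ORDER BY id").fetchall())
# [(1, 'Alice', 10.0), (2, 'Bob', 7.5), (3, 'Carol', 1.0)]
print(bad)  # [(4, None, 3.0), (5, 'Dan', -1.0)]
```

Committing row by row keeps one bad record from rolling back the rest, at the cost of speed; a production tool would more likely load in batches and route rejected rows to an error table for review.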

Automation and scheduling:

- Job scheduling: ETL tools provide scheduling capabilities, allowing users to set up automated data extraction, transformation, and loading at specified intervals (daily, weekly, etc.).

- Automating ETL: Automated processes ensure that data is kept up to date and relevant for analysis.
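Dedicated ETL platforms (or utilities such as cron) handle scheduling for you, but the underlying idea is simple. This sketch computes the next run time for a job that repeats at a fixed interval from an anchor time; the `next_run` and `run_forever` helpers are hypothetical, and the loop deliberately ignores time zones, daylight saving changes, missed runs, and retries.

```python
import time
from datetime import datetime, timedelta


def next_run(now: datetime, interval: timedelta, anchor: datetime) -> datetime:
    """Return the first scheduled time strictly after `now` for a job that
    starts at `anchor` and repeats every `interval` (e.g. daily or weekly)."""
    if now < anchor:
        return anchor
    periods = (now - anchor) // interval + 1  # timedelta // timedelta -> int
    return anchor + periods * interval


def run_forever(job, interval: timedelta, anchor: datetime) -> None:
    """Minimal scheduler loop: sleep until the next slot, then run the ETL job."""
    while True:
        now = datetime.now()
        time.sleep((next_run(now, interval, anchor) - now).total_seconds())
        job()


# A daily job anchored at 02:00 on 2025-01-01, checked in the afternoon of 2025-03-10:
print(next_run(datetime(2025, 3, 10, 14, 30), timedelta(days=1), datetime(2025, 1, 1, 2, 0)))
# 2025-03-11 02:00:00
```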

Performance optimization:

- Advanced functionality: ETL tools might offer optimization features to enhance performance, such as data streaming, parallel processing, bulk database inserts, and so on.
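Two of these optimizations, parallel processing and bulk database inserts, can be sketched in Python with the standard library. The `extract_partition` function is a hypothetical stand-in that generates synthetic rows for one partition of a source; a thread pool extracts the partitions concurrently, and `bulk_load` writes rows in batches with `executemany` inside one transaction per partition.

```python
import sqlite3
from concurrent.futures import ThreadPoolExecutor
from itertools import islice


def batched(iterable, size: int):
    """Yield lists of up to `size` items (itertools.batched exists only in Python 3.12+)."""
    it = iter(iterable)
    while chunk := list(islice(it, size)):
        yield chunk


def extract_partition(partition: int) -> list[tuple[int, int]]:
    """Hypothetical extractor for one partition of a source (e.g. one file or date range)."""
    return [(partition * 1000 + i, i * i) for i in range(1000)]


def bulk_load(conn: sqlite3.Connection, rows: list[tuple], batch_size: int = 500) -> None:
    """Bulk insert: send rows in batches within a single transaction."""
    with conn:
        for chunk in batched(rows, batch_size):
            conn.executemany("INSERT INTO facts VALUES (?, ?)", chunk)


# Parallel processing: extract partitions concurrently (helps when extraction is I/O-bound).
with ThreadPoolExecutor(max_workers=4) as pool:
    partitions = list(pool.map(extract_partition, range(4)))

# The SQLite connection is used only from the main thread.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE facts (id INTEGER PRIMARY KEY, value INTEGER)")
for rows in partitions:
    bulk_load(conn, rows)
print(conn.execute("SELECT COUNT(*) FROM facts").fetchone()[0])  # 4000
```

Threads suit I/O-bound extraction such as network or file reads; CPU-heavy transformations would more likely use processes (for example `ProcessPoolExecutor`) or the engine's own parallelism.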

How to Choose the Best ETL Tool?

Choosing the best ETL tool depends on your organization’s data requirements and budgetary constraints. For future-proofing, it is advisable to choose an ETL tool that supports the most prevalent data formats without charging for additional connectors, scales as requirements change, and offers an easy trial period so you can evaluate it before making a purchasing decision.

When it’s time to evaluate ETL software, here are a few criteria to keep in mind:

- Does it support the data formats you require?

- Is the interface user-friendly?

- How steep is the learning curve and is training required?

- Is it easy to update solutions as requirements change?

- Is pricing transparent as your solution scales?

- Is the solution affordable?

- What support options are available?